Research | Choreographic Interfaces

This research began in 2020, upon my graduation, with a grant from the Harvard Graduate School of Design. It continued with support from metaLAB to develop a prototype for the lab's exhibition, Curatorial A(i)gents, at the Harvard Art Museums' Lightbox Gallery. For the prototype, I collaborated with Jordan Kruguer and Maximillian Mueller, fellow researchers at metaLAB.

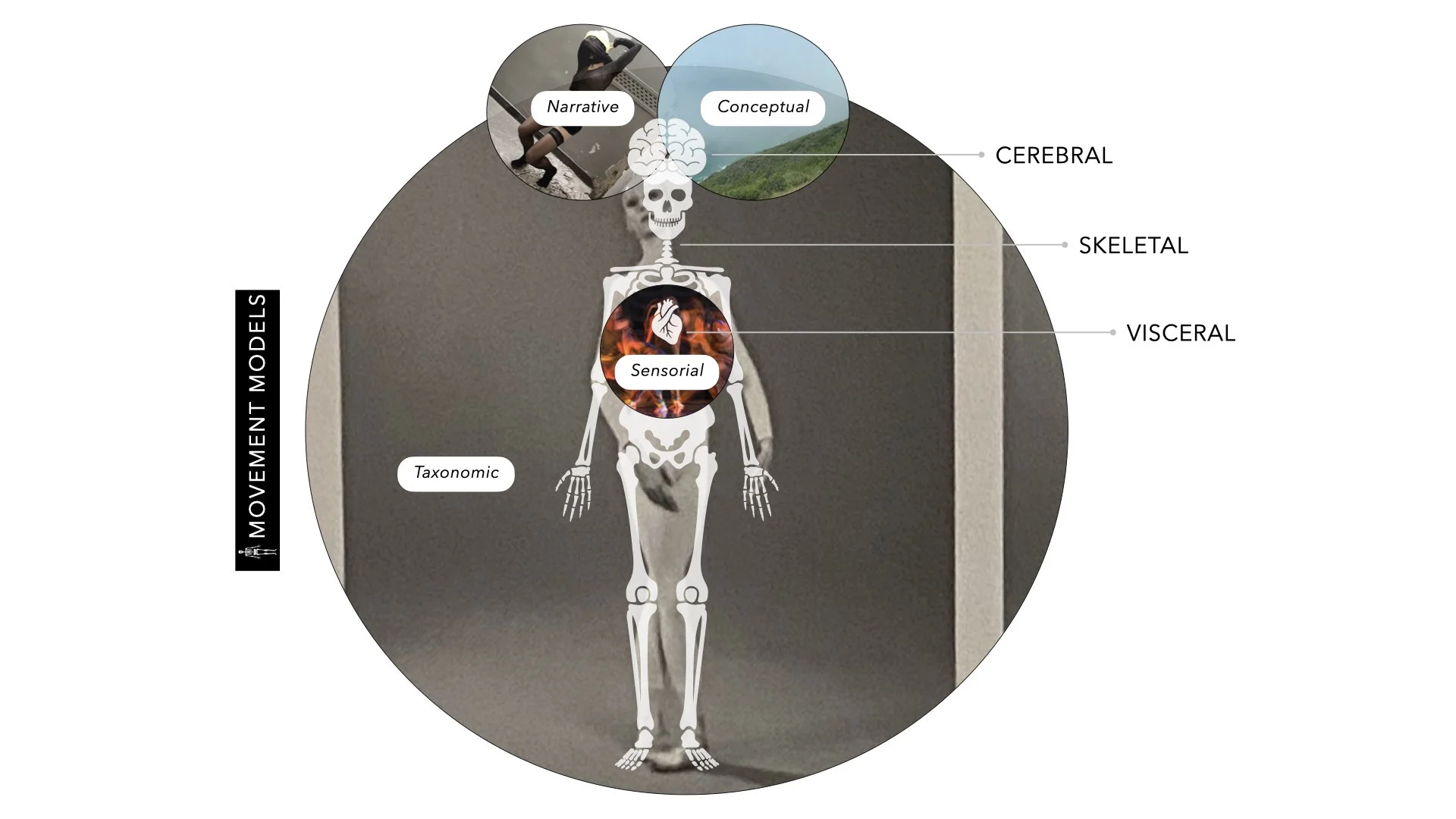

Choreography models by Lins Derry

Semantics

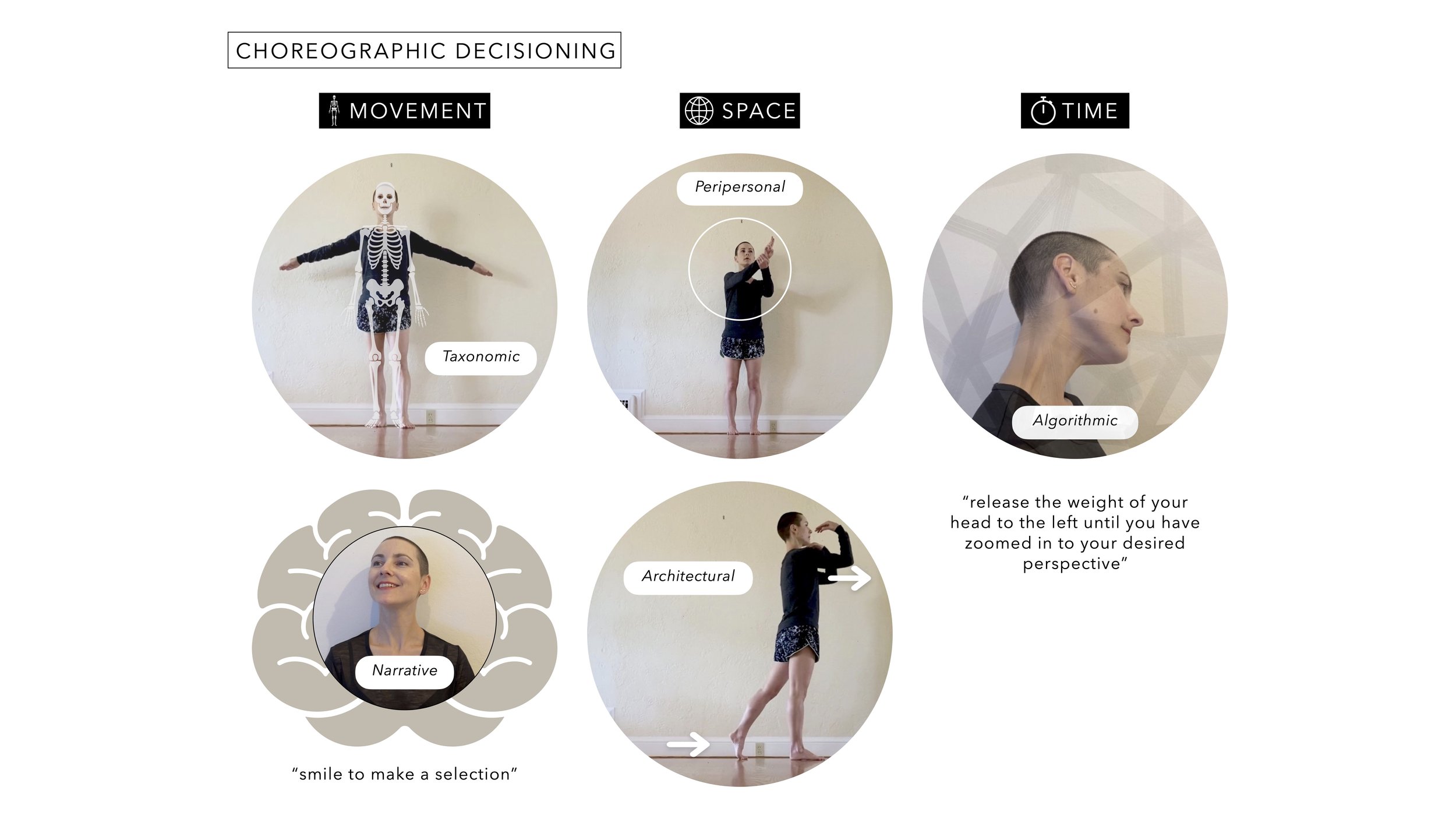

I began my research on choreographic interfaces with semantics. First, I defined a choreographic interface as one that increases the kinetic and spatial interactivity between humans and technological systems by integrating choreographic thinking into the design process. My thesis is that when choreography models are integrated into the design process, the resulting user interactions, or body movements, can help amplify embodied cognition in digital settings. The basis for this is that embodied cognition requires sensory feedback, including that produced by proprioception and the vestibular system, two movement-sensitive systems often ignored by our ocularcentric media. Next, I developed a taxonomy for observing choreographic motifs already present in interaction design and for identifying choreography models amenable to the design process of new interactive systems. This process allowed me to take a methodical approach to incorporating my performance expertise into my design practice, producing an interdisciplinary framework for research.

Prototype

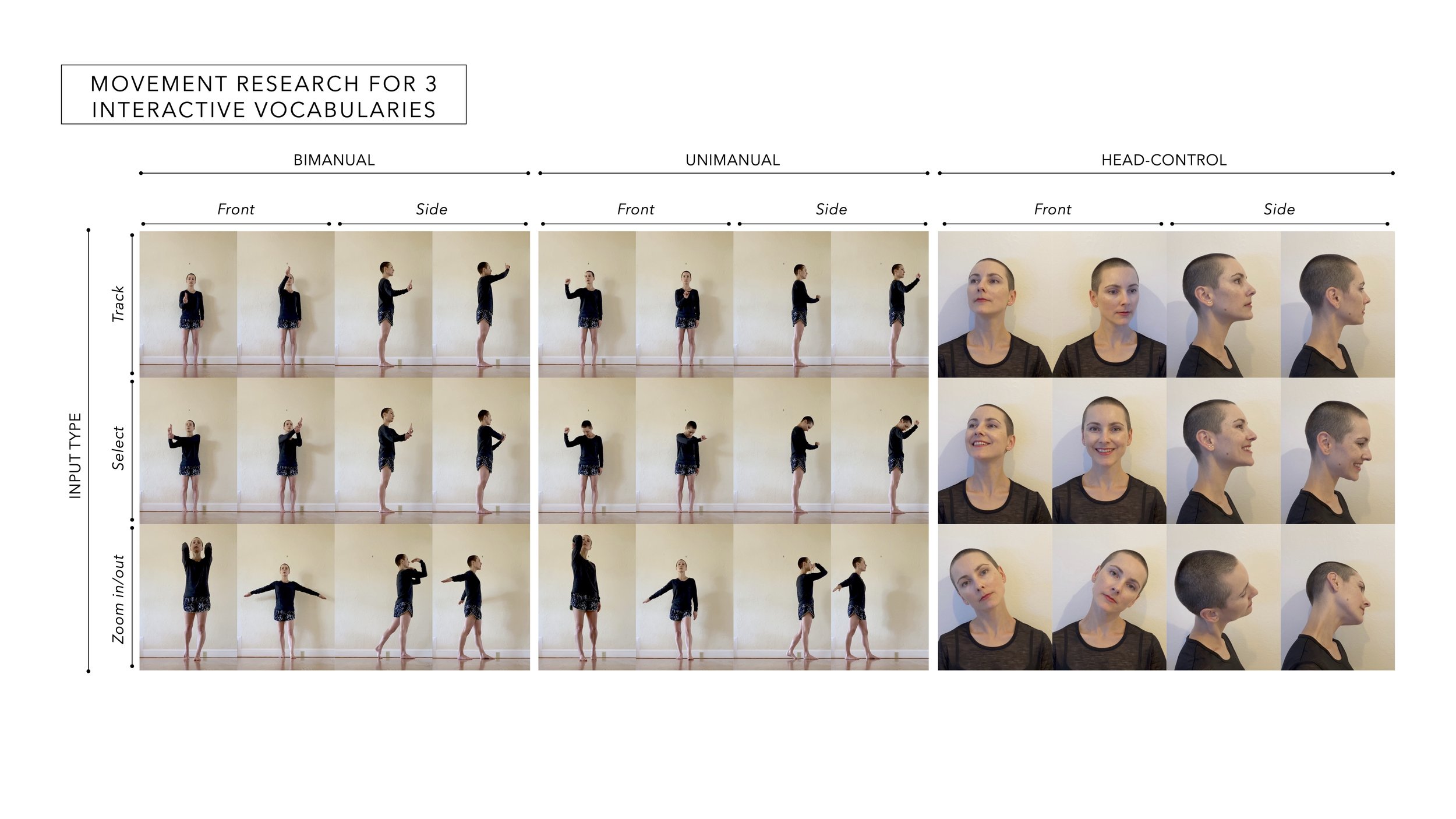

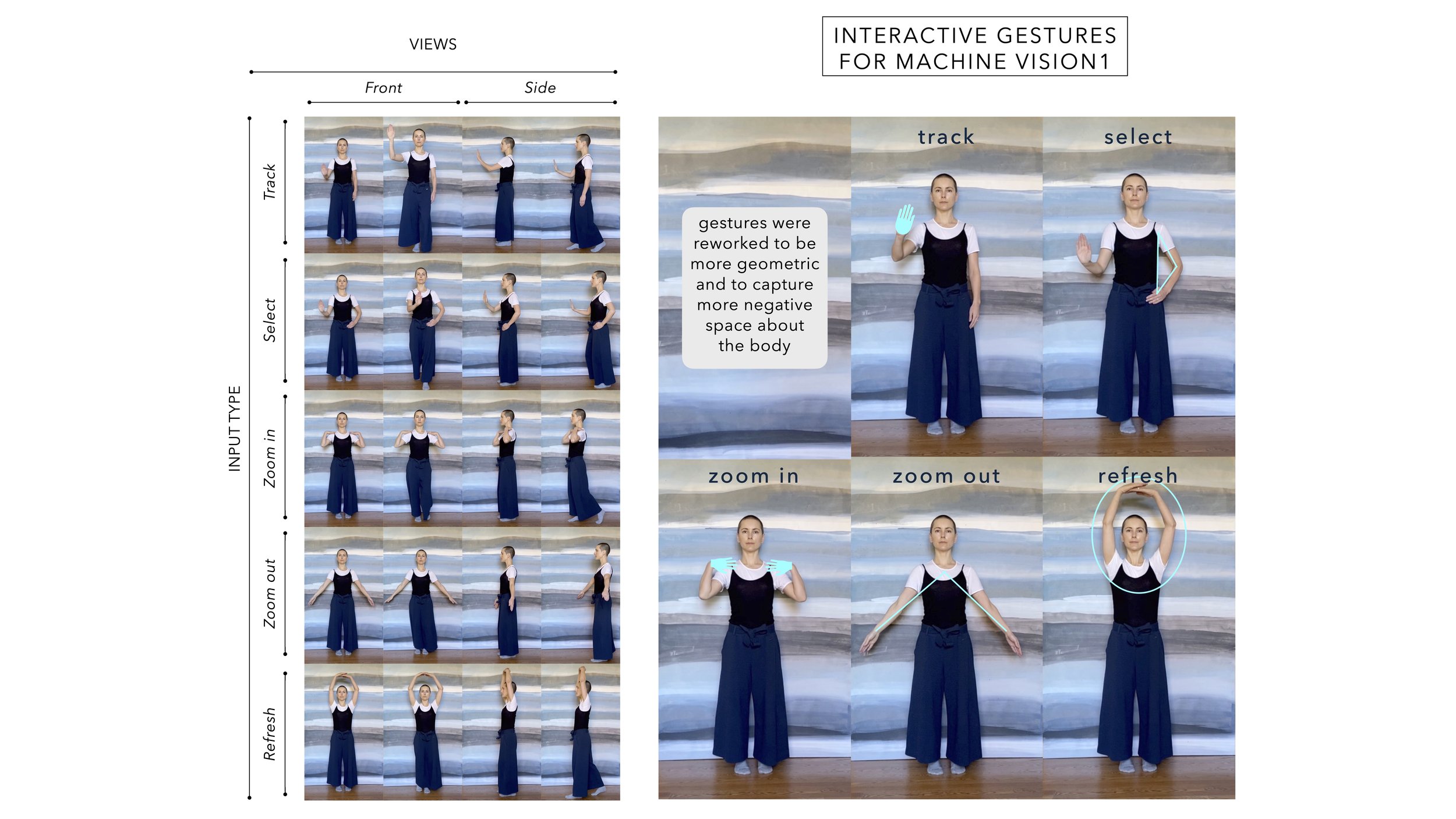

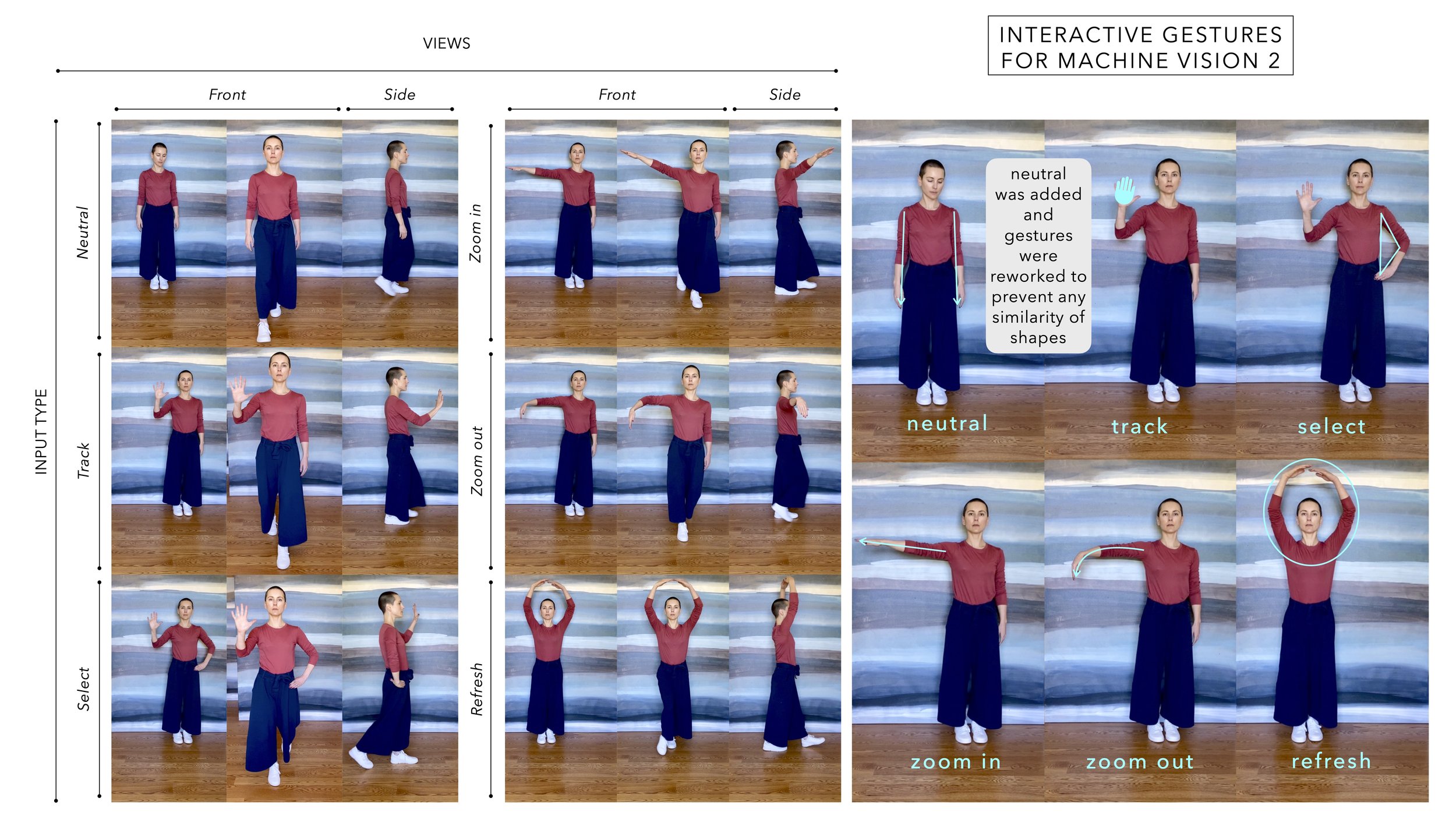

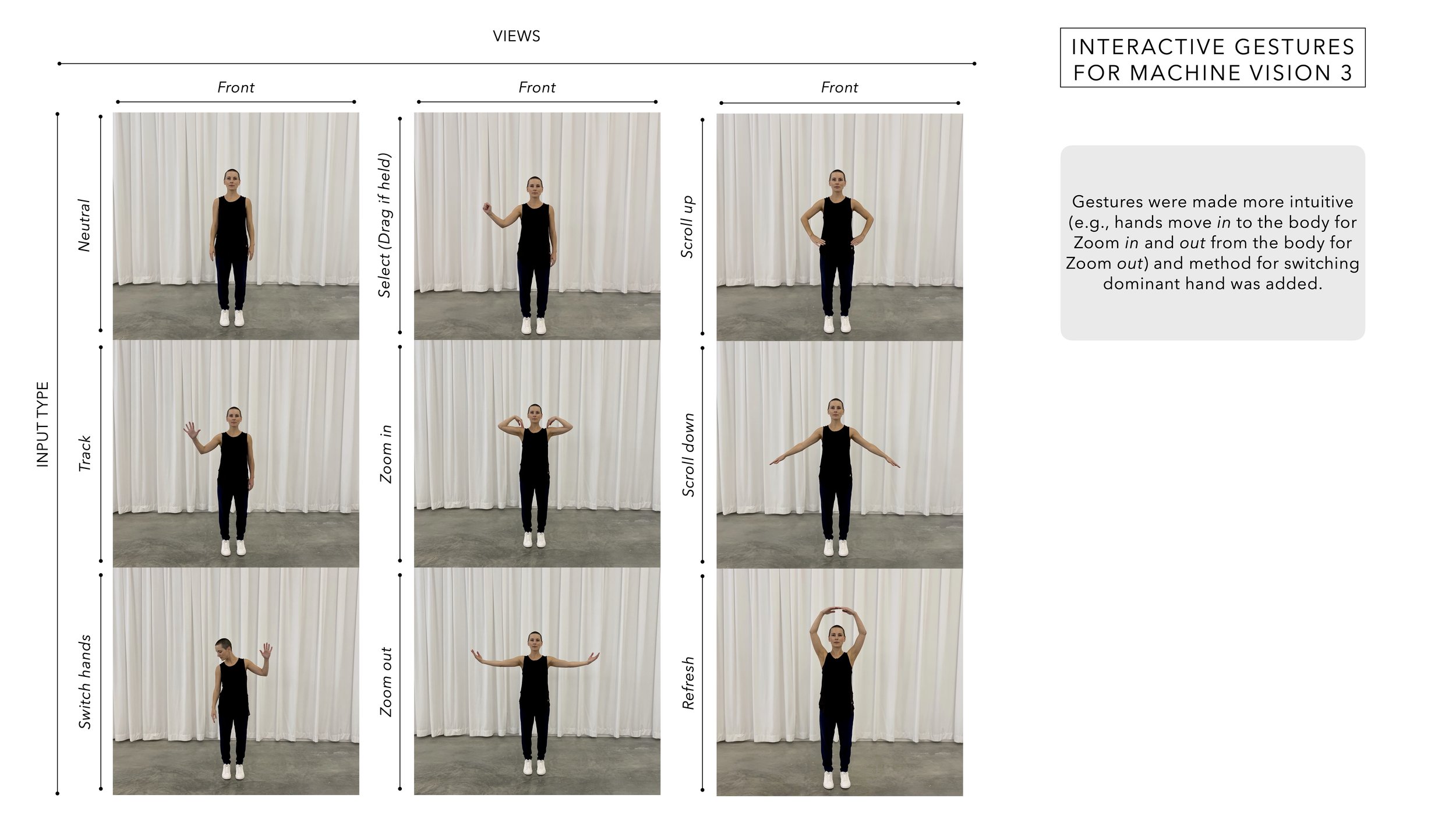

For the Harvard Art Museums prototype, we (Lins Derry and Jordan Kruguer) began with the challenge of designing a post/pandemic "touchless" version that would allow visitors to safely interact with Curatorial A(i)gents’ screen-based projects in the Lightbox Gallery. This challenge led to the idea of using machine vision to mediate the user interactions. Beginning with accessibility research, we workshopped potential embodied interactions with movement and disability experts before experimenting with how machine vision and machine learning models interpret choreographed movement. We then built and tested the architecture for supporting a simple gestural set, including zoom in/out, scroll up/down, select, span, switch hands, and refresh. The torso-scaled gestures for performing these interactions were derived from the taxonomy discussed earlier. Maximillian Mueller then joined the team to develop a sonification score for the interactive gestures.

Publications and Talks on the Choreographic Interface

Movement and Computing Conference (MOCO) 2022

In June 2022, Jordan Kruguer, Maximillian Mueller, Jeffrey Schnapp, and I will present our forthcoming paper titled Designing a Choreographic Interface During COVID-19 at MOCO.

Curatorial A(i)gents: Choreographic Panel 2022

Convening different “schools” of thought - quite literally from Harvard, MIT, and Brown, and more figuratively from design and performance studies - Hiroshi Ishii, Ozgun Kilic Afsar, Sydney Skybetter, and I met on Zoom to talk about the choreographics of interactive technology. This recorded talk 👇 was presented as part of the Curatorial A(i)gents panel series.

Information+ Conference 2021 & Information Design Journal | Information+ Special Issue 2022

During the summer of 2021, Jordan Kruguer and I joined collaborators Dario Rodighiero, Douglas Duhaime, Christopher Pietsch, and Jeffrey Schnapp to integrate the choreographic interface into the data visualization project Surprise Machines. For background, Surprise Machines visualizes the entire universe of the Harvard Art Museums’ collections, with the aim of opening up unexpected vistas on the more than 200,000 objects that make them up. To accomplish these surprise encounters, “black box” algorithms are employed to shape the visualizations, and a choreographic interface connects the audience’s movement with several unique views of the collection. At the Information+ Conference 2021, we presented a 4-minute lightning talk 👇 in which we discussed how we worked as a team. As a follow-up, our paper, Surprise Machines: Revealing Harvard Art Museums’ Image Collection, will be published in the Information Design Journal, Information+ Special Issue 2022.

Ars Electronica 2020

In September 2020, Ars Electronica invited metaLAB to discuss our work on Curatorial A(i)gents. There I had the opportunity to present both my work on Second Look: gender and sentiment on show and my research on choreographic interfaces. Below is my talk on the latter 👇.